What Is a Context Window? How Understanding Context Windows Affects the Application of Generative AI

The context window determines whether a generative AI model can handle multi-turn dialogue and long-text tasks. It is the maximum number of tokens the model can process in a single request, counting input, output, and internal reasoning tokens. This limit directly affects model performance, cost control, and application efficiency. This article explains how context windows work, covers best practices, and shows how to combine them with advanced prompt design strategies to get the most out of them.

What Is a Context Window?

The context window is the maximum number of tokens that a generative AI model can process in a single request. It covers three types of tokens:

- Input tokens: The content provided by the user, such as a question or instruction.

- Output tokens: The response content generated by the model.

- Reasoning tokens: Tokens consumed by the model's internal thinking and planning while it generates a response.

For example, Claude 3 provides a context window of up to 200K tokens, which is enough for very complex, data-intensive tasks. However, once the context window limit is exceeded, the excess content may be truncated, leaving the output incomplete.

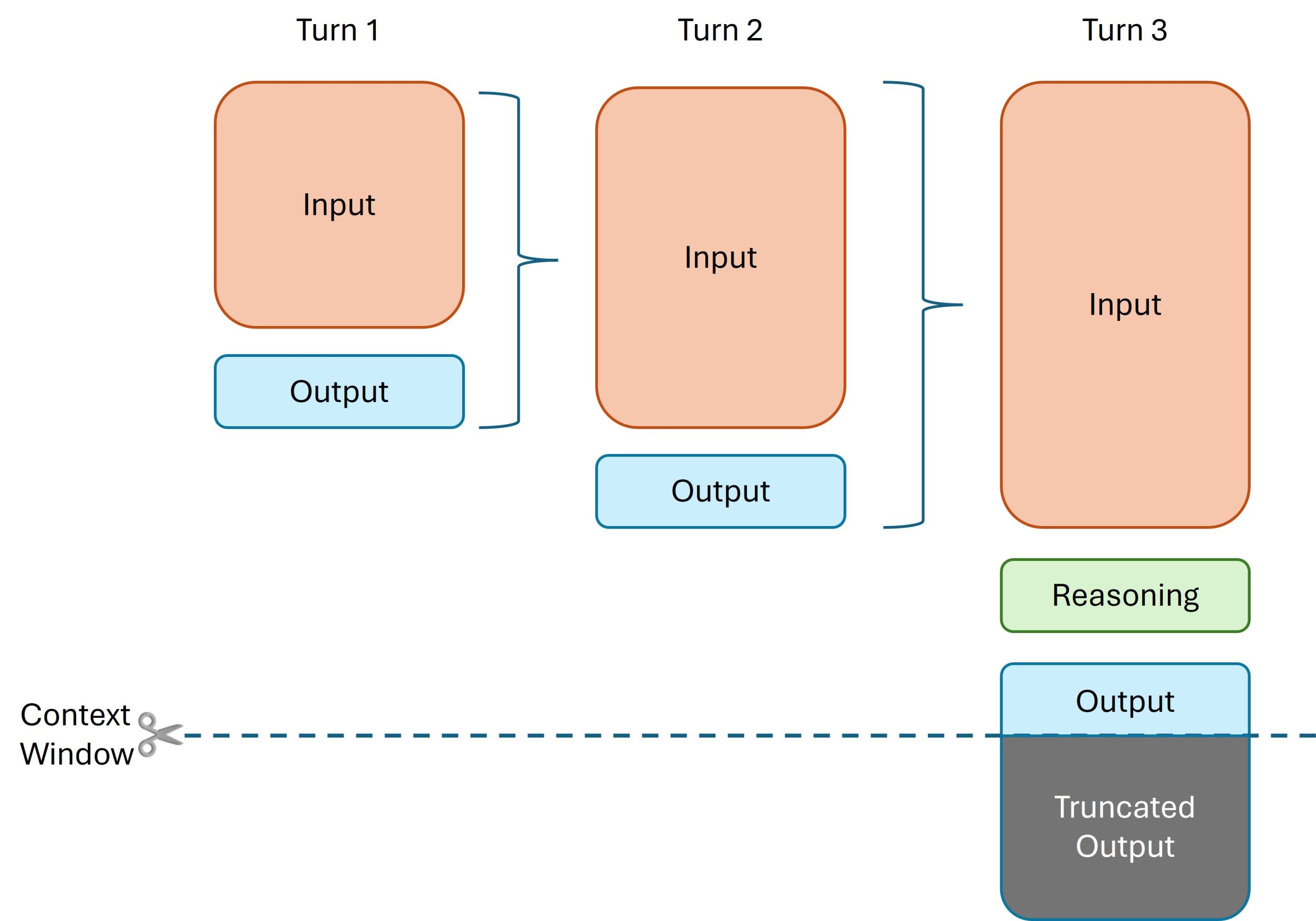

How context windows work

The context window determines how much the model can keep in view when processing long text or multi-turn conversations. It works as follows:

- Token budget: The total number of input, output, and reasoning tokens cannot exceed the window limit.

- Truncation mechanism: When the token count exceeds the context window size, the excess is truncated, which may leave the output incomplete.

- Multi-stage application challenges: In multi-stage tasks, how efficiently the window is used directly affects the model's ability to preserve context.

This means that when using generative AI, users need to carefully design prompt content to avoid wasting window resources.
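The budget rule above can be sketched in a few lines of Python. This is a minimal illustration, not any provider's API: the `TokenBudget` class and its field names are hypothetical, and the token counts are made-up figures chosen to fit a 200K window.

```python
from dataclasses import dataclass


@dataclass
class TokenBudget:
    """Tracks how one request spends a context window (all counts in tokens)."""
    window: int            # total context window size
    input_tokens: int      # prompt, documents, conversation history
    reasoning_tokens: int  # reserved for the model's internal reasoning

    def max_output_tokens(self) -> int:
        """Tokens left for the visible response; 0 means the request does not fit."""
        return max(0, self.window - self.input_tokens - self.reasoning_tokens)

    def fits(self, desired_output: int) -> bool:
        """True if input + reasoning + desired output stay within the window."""
        total = self.input_tokens + self.reasoning_tokens + desired_output
        return total <= self.window


# Hypothetical figures: a 200K window with 150K of input and 20K of reasoning.
budget = TokenBudget(window=200_000, input_tokens=150_000, reasoning_tokens=20_000)
print(budget.max_output_tokens())  # 30000
print(budget.fits(40_000))         # False
```

Checking the budget before sending a request makes it clear how much room is left for the response, instead of discovering the limit through a truncated output.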

How context windows affect the performance and application of generative AI

The context window affects the performance and application of generative AI in the following aspects:

- Text processing capabilities: Larger windows allow the model to process more contextual information and improve the accuracy of complex tasks.

- Compute cost: The larger the window, the more resources the model needs to run, so there is a trade-off between performance and cost.

- Multi-turn dialogue consistency: Long windows can help the model remember longer conversation histories and provide coherent responses.

For example, a window of 1 million tokens can hold roughly 50,000 lines of code, 8 English novels, or more than 200 podcast transcripts, which shows the potential of generative AI in long-text applications.
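For the multi-turn case mentioned above, one common way to stay inside a fixed window is to keep only the most recent messages that fit a token budget. The sketch below assumes a crude estimate of about 4 characters per token, which is a rough heuristic rather than a real tokenizer; the function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Crude estimate (assumption: ~4 characters per token); not a real tokenizer."""
    return max(1, (len(text) + 3) // 4)


def trim_history(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose estimated total fits within `budget`."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break  # this message and everything older is dropped
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = ["a" * 40, "b" * 40, "c" * 40]  # about 10 estimated tokens each
trimmed = trim_history(history, budget=25)
print(len(trimmed))  # 2 (the two most recent messages)
```

In practice the estimate would be replaced by the tokenizer of the model being called, since token counts differ between models and languages.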

Context Window Best Practices

- Streamline input content: Only keep key information to avoid wasting tokens.

- Control output length: Limit the number of words in the generated response to save window resources.

- Multi-stage processing: Use low-cost models for preliminary screening first, and then let high-performance models handle more complex parts.
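The multi-stage idea can be sketched as a two-step pipeline. Everything here is a stand-in: `keyword_filter` plays the role of the low-cost screening model and `fake_strong_model` plays the role of the high-performance model, so the example runs without calling any real API. All names are hypothetical.

```python
from typing import Callable


def two_stage_answer(
    chunks: list[str],
    question: str,
    is_relevant: Callable[[str, str], bool],
    answer: Callable[[list[str], str], str],
    max_chunks: int = 3,
) -> str:
    """Screen chunks with a cheap step, then pass only the survivors to the costly step."""
    shortlisted = [c for c in chunks if is_relevant(c, question)][:max_chunks]
    return answer(shortlisted, question)


def keyword_filter(chunk: str, question: str) -> bool:
    """Stand-in for a low-cost model: match any question word longer than 3 letters."""
    words = [w.lower() for w in question.split() if len(w) > 3]
    return any(w in chunk.lower() for w in words)


def fake_strong_model(chunks: list[str], question: str) -> str:
    """Stand-in for a high-performance model: just report what it received."""
    return f"Answered '{question}' using {len(chunks)} chunk(s)"


docs = [
    "Pricing is billed per token.",
    "Our office is closed on Sundays.",
    "Token limits vary by model.",
]
result = two_stage_answer(docs, "What are the token limits?", keyword_filter, fake_strong_model)
print(result)  # Answered 'What are the token limits?' using 2 chunk(s)
```

The point of the design is that the expensive step only ever sees the shortlisted chunks, so its share of the context window is spent on material that is likely to matter.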

To further understand model costs, refer to the information in ChatGPT API Fees Detailed, Claude API Fees, and Gemini API Fees Explained.

Advanced Prompt Design Tips for the Context Window

- Place long text at the top: Placing long text (such as inputs of 20K+ tokens) at the top of the prompt, above the instructions and query, can significantly improve response accuracy.

- Query placed at the end: Testing shows that placing the query at the end of the prompt can improve response quality by up to 30%.

- Structured document content: Use XML tags (such as

- Reference document content: Guide the model to prioritize relevant document parts, filter out unnecessary information and improve accuracy.
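The tips above can be combined in one small prompt builder: documents go first, each wrapped in XML tags, and the query goes last along with a request to quote the relevant passages. The tag names (`documents`, `document`, `source`, `content`) and the function name are illustrative assumptions, not a required schema.

```python
from xml.sax.saxutils import escape


def build_long_context_prompt(documents: dict[str, str], query: str) -> str:
    """Put tagged documents first and the query last."""
    parts = ["<documents>"]
    for source, content in documents.items():
        parts.append("  <document>")
        # escape() protects the tag structure from '<', '>' and '&' in the text
        parts.append(f"    <source>{escape(source)}</source>")
        parts.append(f"    <content>{escape(content)}</content>")
        parts.append("  </document>")
    parts.append("</documents>")
    parts.append("")
    parts.append("First quote the passages relevant to the question, then answer it.")
    parts.append(f"Question: {query}")  # the query stays at the very end
    return "\n".join(parts)


prompt = build_long_context_prompt(
    {"report.txt": "Revenue grew in Q3 & Q4."},
    "Which quarters showed growth?",
)
print(prompt)
```

Keeping the assembly in one function makes it easy to test that long content always lands above the instructions and that the query is always the final line.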

Littlepig AI model integration API

Littlepig Technology's Model Integration API provides enterprises with flexible and efficient AI solutions and supports multiple mainstream AI models (such as OpenAI's GPT series and Anthropic's Claude). Through the integrated API, users can easily switch between models and select the most suitable one for their context window requirements, balancing performance and cost.
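Choosing a model by context window requirement can be sketched independently of any particular API. The example below does not use the Littlepig API, whose interface is not described here; it only shows the selection logic. The model labels are illustrative rather than real API identifiers, the window sizes should be checked against each provider's current documentation, and treating the smallest sufficient window as the cheaper choice is an assumption.

```python
from typing import Optional


def pick_model(required_tokens: int, windows: dict[str, int]) -> Optional[str]:
    """Return the model with the smallest window that still fits the request."""
    candidates = [(size, name) for name, size in windows.items() if size >= required_tokens]
    return min(candidates)[1] if candidates else None


# Illustrative labels and window sizes in tokens (assumptions, verify before use).
WINDOWS = {"gpt-4o": 128_000, "claude-3": 200_000}

print(pick_model(50_000, WINDOWS))   # gpt-4o
print(pick_model(150_000, WINDOWS))  # claude-3
print(pick_model(500_000, WINDOWS))  # None
```

A `None` result signals that no listed model can take the request in one pass, which is the cue to shorten the input or split the task into stages.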

Context Window FAQ

Q1: How to manage the use of tokens within the context window?

A: Streamline input and output, and combine structured tags with a multi-stage processing strategy to manage tokens effectively.

Q2: What are the advantages of long contextual prompts?

A: They improve the model's ability to handle multiple documents and complex inputs, especially when the content is structured and the query is placed at the end.

Q3: What are the differences between context windows of different models?

A: Different models support different window lengths, though the gap between mainstream models is often modest. For example, Claude 3 supports 200K tokens, while GPT-4o's window is shorter at 128K tokens. Users can choose the model that suits their application scenario.

Conclusion and Viewpoints

The context window is a core element of applying generative AI successfully, and it directly affects model performance and the choice of application scenarios. Users can tap its full potential through streamlined content, advanced prompt design, and careful model selection. Littlepig Technology's integrated API also provides flexible tools that help companies manage resources efficiently and balance performance against cost. Mastering context window best practices is critical to succeeding with generative AI.

Littlepig Tech is committed to building an AI and multi-cloud management platform to provide enterprises with efficient and flexible multi-cloud solutions. Our platform supports the integration of multiple mainstream cloud services (such as AWS, Google Cloud, Alibaba Cloud, etc.), and can easily connect to multiple APIs (such as ChatGPT, Claude, Gemini, Llama) to achieve intelligent deployment and automated operations. Whether it is improving IT infrastructure efficiency, optimizing costs, or accelerating business innovation, Littlepig Tech can provide professional support to help you easily cope with the challenges of multi-cloud environments. If you are looking for a reliable multi-cloud management or AI solution, Littlepig Tech is your ideal choice!

Please contact our team of experts and we will be happy to assist you. You can email us or message us on Telegram or WhatsApp to discuss how we can support your needs.
